Creating the Motorway iOS app and app clip to decrease the seller drop-off rate

Senior Product Designer | App | 2021

Overview

In 2021 I was part of the team of three that created the MVP for the Motorway iOS app.

Motorway requires users to take very specific photos of their vehicle. Dealers do not see the vehicle in person before making an offer, so photos captured in the specified way help dealers bid with confidence. Being made to take photos in a certain way is a large pain point for our sellers.

By using iOS app clips, a brand new technology at the time, we would be able to utilise native camera functionality whilst also avoiding the friction of needing sellers to download a full app.

The MVP was to focus only on improving the photo-taking process and to hash out the basics, keeping in mind that down the line we would be able to include AI image assist along with the full selling process.

Goal: improve photo quality, decrease time taken from 1st photo uploaded to ready for sale, increase user engagement.

Role

Senior Product Designer

Ideation, UX thinking, Usability testing, Rapid prototyping

Team members

1 x iOS fullstack developer

1 x Product Manager for 50% of the time, ended up doing both

Timescale

6 months from start of discovery to release in 2021

The challenge

Before this, Motorway's products were solely web apps, including our photo web app. This had its pros, a big one being that we were not distracting sellers on mobile by making them switch platforms, but there were many cons. Without native camera functionality through an app, we could not assist sellers in taking the best possible photos of their vehicle.

I was put into a brand new team, with the role of establishing the new journey our sellers could take when profiling their vehicle. We were a team of three, and the other two members were brand new to the business, which meant that we had to work out the best ways of working together.

The more we understood what app clips can and cannot do, the more we realised that we had to create a full iOS app first. The MVP therefore needed to extend beyond the photos app clip to include profiling, valuation and authentication.

The Solution

We took this opportunity to do a deep discovery into what our sellers require from us, analysing all of the photos taken and the similarities between the incorrect shots. Looking at the quality of the photos, we found that many people were still taking photos incorrectly even with our guidance, and many were even taking photos with their fingers over the lens.

Our previous hypothesis was that users want to take all of their photos as quickly as possible, so making the process as simple and smooth as possible was our priority. After seeing how our sellers used the web app, we now know that they need mandatory guidance beforehand on how to take the right shot, and that they need to see each photo after taking it to check that it is good quality (not blurry, good lighting, etc). Our prior focus on getting sellers through as quickly as possible meant that they would have to retake nearly all of their shots. This proved our hypothesis incorrect, so we shifted to slowing sellers down and letting them focus on getting it right, aiming to decrease the time spent from offer select to ready for sale.

In order to create our desired app clip, we had to create a full app too. This meant that we needed to widen the scope to include auth and the profiling journey, but knowing that we were focusing on the app clip rather than the full app, we did not want to redo the profiling journey as a whole but rather to simply lift and shift it.

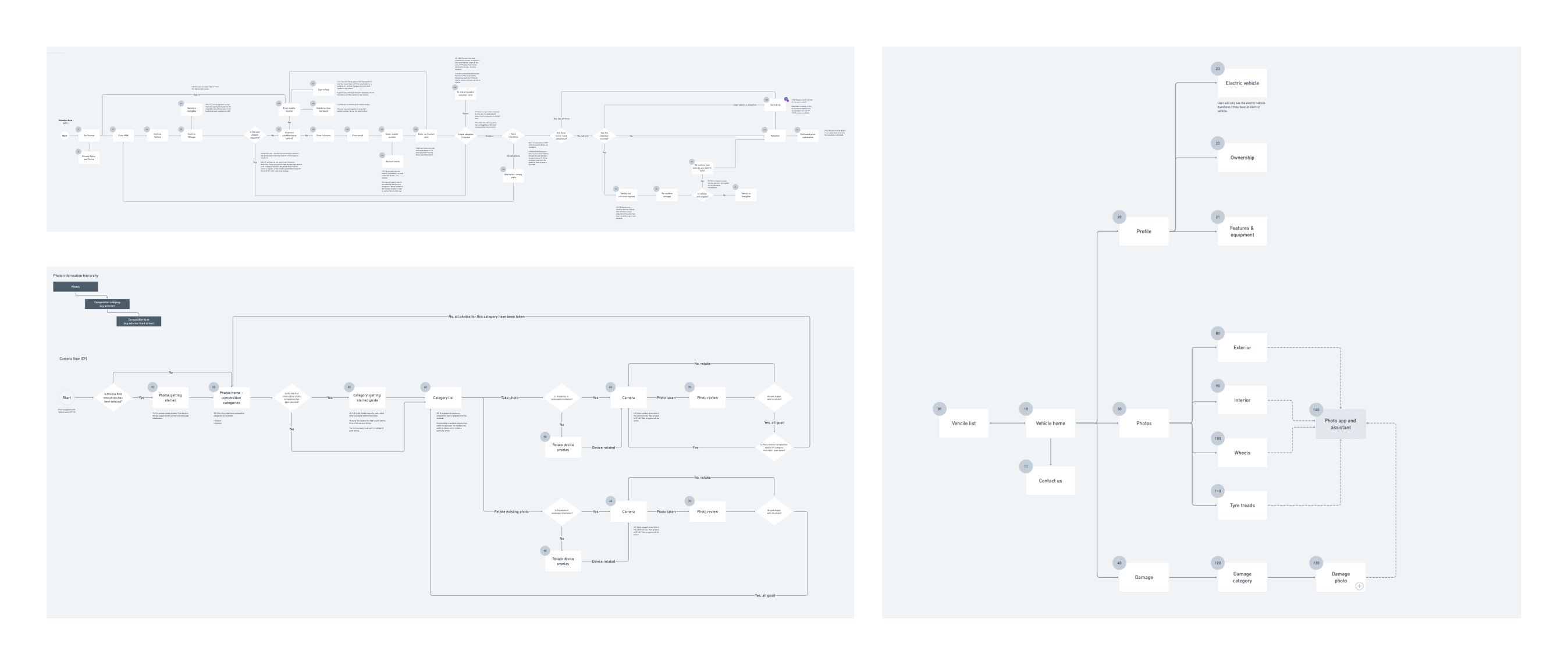

A few of the user flows that were created for the app and app clips. These were created with the developer involved so that any technical limitations were considered. Each point in the flow has a number and a visual of what the user sees, so that we could reference it in stakeholder reviews and in the wireframes.

The benefit of going down the app clip route was that we wouldn’t have to take the user away from the selling process to the App Store, which was too big a risk for the business to take. This route required a lot of additional work from us, however. We needed to flesh out the journey of the user arriving at the point of taking photos, and as we knew that more often than not the photos need retaking, we needed sellers to be able to rejoin the app clip with all of their information stored.

This is where our not-so-little edge cases slipped in. What if they then switched and continued via the web app rather than the app clip or full app? What if the user deleted the app and then went to the app clip? It would have been quite the headache to solve solo, so the developer working on this and I sat together and mapped out the journey in full, starting from in-depth user flows for each section of the app and highlighting what the backend would do at each stage.

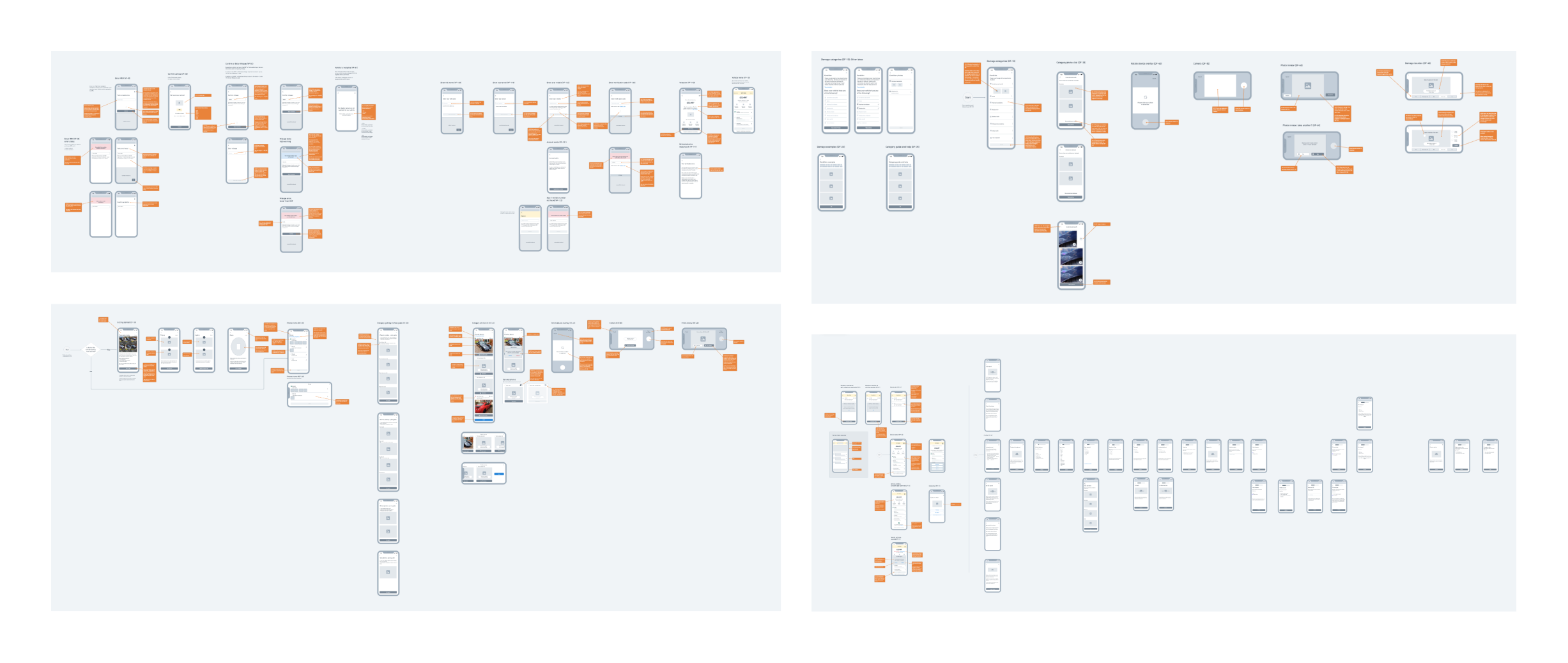

A breakdown of the wireframes created for each section of the app. This was created using Whimsical, and annotated for the developer I was working alongside, making sure that we were aligned and I could design what is technically possible.

Once we were close to low-fidelity designs, we tested some of our proposed solutions with users to see if we could utilise native components rather than start from scratch. We wanted to check that users would understand the way we intended to use them before we created a lot of unnecessary development work building brand new components when toggles and the like sufficed.

As we needed to get this MVP out to see how users felt about it, and to start gathering the high-quality images needed to later include machine learning, we could not add too much in the way of image quality enhancement. One non-negotiable for us was using the device accelerometer to make sure that the seller was taking level photos. We wanted users to become familiar with seeing alerts in the shot, and to know to slow down and pay attention to what they’re taking. We found this to be incredibly successful: it forces them to look at the shot, and they can only take the photo when the device is fully level.
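To illustrate the idea behind the level check, here is a minimal sketch rather than the code we shipped. It assumes the app receives a gravity vector in the device's reference frame (on iOS this would typically come from Core Motion's `CMDeviceMotion.gravity`) and that the phone is held in landscape. The `Gravity` type, the function names and the 3 degree tolerance are all hypothetical and chosen only for illustration.

```swift
import Foundation

/// Gravity in the device's reference frame, in units of g.
/// Hypothetical stand-in for the values Core Motion reports.
struct Gravity {
    let x: Double
    let y: Double
    let z: Double
}

/// Horizon tilt in degrees for a phone held in landscape.
/// 0 means the long edge of the phone is parallel to the ground.
func horizonTiltDegrees(_ g: Gravity) -> Double {
    // In landscape, gravity lies mostly along the device's x axis,
    // so any y component means the phone is rotated about the lens axis.
    return atan2(g.y, abs(g.x)) * 180 / Double.pi
}

/// Hypothetical shutter gate: true only when the phone is upright in
/// landscape and the horizon tilt is within the tolerance (assumed 3 degrees).
func isLevel(_ g: Gravity, toleranceDegrees: Double = 3) -> Bool {
    // If the phone is lying nearly flat, x and y are both small and the
    // tilt angle is meaningless, so treat that case as not level.
    guard hypot(g.x, g.y) > 0.5 else { return false }
    return abs(horizonTiltDegrees(g)) <= toleranceDegrees
}
```

With a check like this, the shutter button would only be enabled while `isLevel` returns true, and the on-screen alert would be shown otherwise. A phone held in portrait reads as roughly 90 degrees of tilt, so the same check also covers the prompt to rotate the device.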

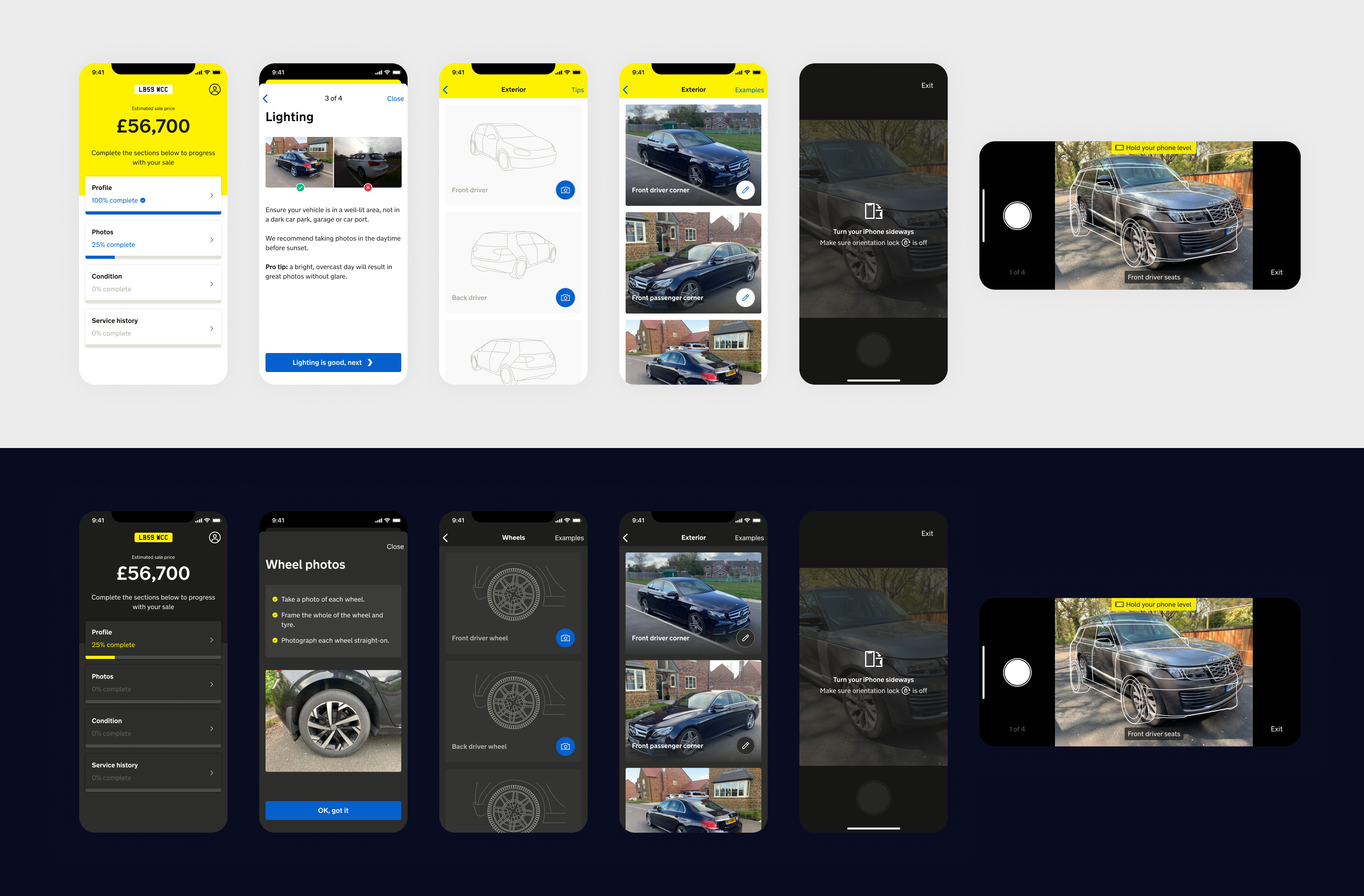

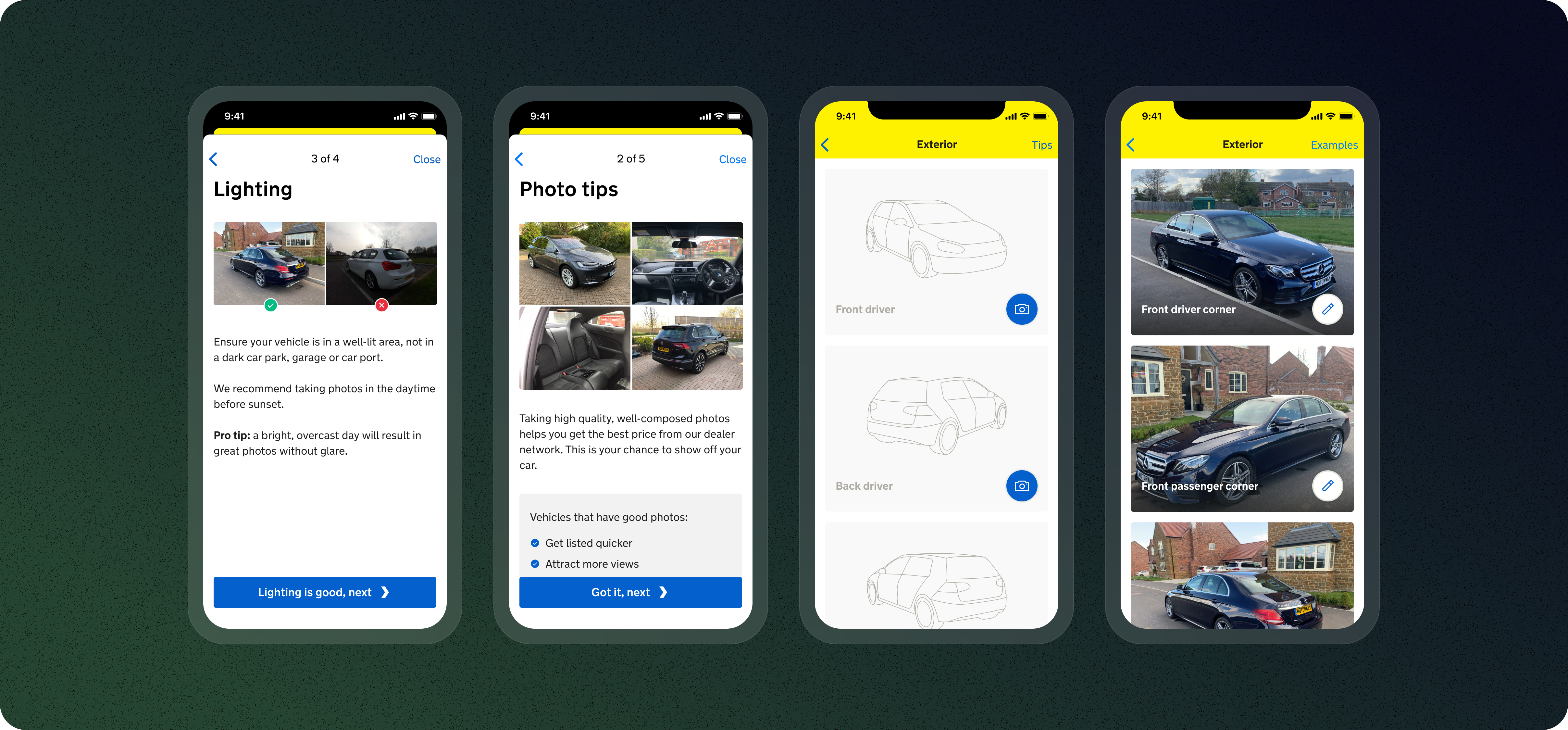

The first two visuals here are the mandatory, view-once modal sheets for each section of the photo app. The second two are the photo gallery list where the photos are displayed, with the option to view the examples again.

The main purpose of the app is for sellers to take the best possible photos. You can see here that we instruct users to rotate their device, and to hold their phone level if the app shows that it isn't. The vehicle outline shows them where the car should sit for the best angle.

Results & metrics

As we are now using the native camera functionality rather than the browser video option, we can safely say that the quality of the photos has increased significantly. This instantly helped our dealers bid with confidence, as they are now able to zoom in on the vehicle and view possible imperfections clearly.

The next steps for us were to improve the guidance around photo taking so that it appears at the right stage, and to gather high-quality vehicle images for the machine learning squad, whose image assistance we eventually rolled out.

We have noted that users are split across the iOS app, the app clip, the web app and the later-released Android app. We are looking to deprecate the web app after the successes of the apps, knowing now that app users have a 20% higher rate of getting from offer select to ready for sale.